Introduction

The bootstrap is a Monte Carlo strategy that is increasingly popular in statistical analysis. Its growing popularity among practitioners has a couple of explanations. First, it helps overcome the small-sample problem. Because most econometric critical values derive from asymptotic distributions, it is often difficult to reconcile the small sample used in estimation with these asymptotic results when testing hypotheses. Second, the approach is non-parametric in the sense that it does not rest on an a priori assumption about the distribution. The distribution is data-based and therefore bespoke to the data used. In addition, in some cases the analytical results are difficult to derive. Thus, when asymptotic critical values are difficult to justify because the sample is small, or when the distributional assumptions underlying the results are questionable, one can employ the bootstrap approach instead.

To bootstrap figuratively means to pull yourself out of the quicksand all by yourself, using the straps of your boots. In the same way, the approach does not require the stringent assumptions that clog the wheels of analysis. Instead, the bootstrap uses the available data as the basis for computing the critical values by sampling the data with replacement. The sampling is iterated many times, each iteration drawing the same number of observations as the original data. Each observation has an equal chance of being drawn at every draw. The sampled data, also called pseudo or synthetic data, are used to perform the analysis. The result is a collection of statistics whose distribution mimics the sampling distribution of the statistic of interest.

How it works...

The generic procedure for carrying out a bootstrap simulation is as follows:

Suppose one has \(n\) observations \(\{y_i\}_{i=1}^n\) and is interested in computing a statistic \(\tau_n\). Let \(\hat{\tau}_n\) be its estimate using the \(n\) observations. Now, sample \(n\) observations with replacement. In any given iteration, some observations may be drawn more than once while others are not drawn at all. But if the iterations are repeated long enough, every observation will very likely be included at some point. The set of statistics based on this large number of iterations, say \(B\), is represented as\[\hat{\tau}_n^1, \cdots,\hat{\tau}_n^B\]Basic statistics tells us that every statistic has a distribution. The collection above approximates that distribution using the bootstrap strategy. One can then compute any summary of interest: mean, median, variance, standard deviation, and so on. In fact, one can plot a graph to see what the distribution looks like.
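The generic procedure can be sketched outside Eviews as follows. This is a minimal illustration in Python using only the standard library; the function name bootstrap_statistic and the data values are hypothetical and chosen only so the sketch runs.

```python
import random
import statistics

def bootstrap_statistic(data, stat, n_boot=999, seed=0):
    """Return n_boot values of `stat`, each computed on a resample of `data`."""
    rng = random.Random(seed)
    n = len(data)
    draws = []
    for _ in range(n_boot):
        # Draw n observations with replacement; each has probability 1/n per draw.
        resample = rng.choices(data, k=n)
        draws.append(stat(resample))
    return draws

# Hypothetical observations, made up for illustration only.
y = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7, 3.9, 2.5]

# The B = 999 bootstrap replicates of the sample mean.
boot_means = bootstrap_statistic(y, statistics.mean)

# Summaries of the bootstrap distribution.
boot_mean = statistics.mean(boot_means)
boot_se = statistics.stdev(boot_means)
print(len(boot_means), round(boot_mean, 3), round(boot_se, 3))
```

Any other statistic, such as statistics.median, can be passed in place of statistics.mean without changing the resampling loop.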

Within regression analysis, the same steps are involved, although the focus is now on the parameters and the restrictions derived from them. To make the analysis concrete, suppose we have the following model:\[y_t=\alpha + \theta_1 y_{t-1} + \theta_2 y_{t-2}+ \theta_3 y_{t-3} +\eta_1 x_{t-1} + \eta_2 x_{t-2}+ \eta_3 x_{t-3} +\epsilon_t\]This equation can be viewed as the y-equation of a bivariate VAR(3) model. A natural exercise in this context is the non-causality test. For this, one can set up the null hypothesis that\[H_0: \eta_1=\eta_2=\eta_3=0\]Of course, this is easily carried out in Eviews. After estimation, click on the View tab and hover over Coefficient Diagnostics. Follow the right arrow and click on Wald Test - Coefficient Restrictions.... A dialog box will prompt you. There, you can input the restriction as

C(5)=C(6)=C(7)=0

The critical values in this case are based on the asymptotic \(\chi^2\) or \(F\) distribution, depending on which one is chosen. Indeed, the two are related. However, they may not give appropriate answers, either because they are asymptotic or because parametric assumptions are made.

Bootstrapping the regression model

You can use the bootstrap strategy instead. This is how you can proceed. Using the model above, for example, you can

- Estimate the model and obtain the residuals;

- De-mean the residuals by subtracting their mean. This ensures that the residuals are centered around zero, just as the random errors they represent are assumed to have zero mean, that is, \(E(\epsilon_t)=0\). Let the (centered) residuals be represented as \(\epsilon_t^*\);

- Using the centered residuals and conditional on the estimated parameters, reconstruct the model as \[y_t^*=\hat{\alpha} + \hat{\theta}_1 y_{t-1}^* + \hat{\theta}_2 y_{t-2}^*+ \hat{\theta}_3 y_{t-3}^* +\hat{\eta}_1 x_{t-1} + \hat{\eta}_2 x_{t-2}+ \hat{\eta}_3 x_{t-3} +\epsilon_t^*\]where the hat denotes the values estimated in Step 1. In this particular exercise, the process is carried out recursively because lags of the endogenous variable are among the regressors. Also note that the process is initialized by setting \(y_0=y_{-1}=y_{-2}=0\). In static models, it is sufficient to substitute the estimated coefficients and the exogenous regressors. This is the stage where the (centered) residuals are sampled with replacement;

- Using the computed pseudo data for endogenous variable, \(y_t^*\), estimate the model: \[y_t^*=\gamma + \mu_1 y_{t-1}^* + \mu_2 y_{t-2}^*+ \mu_3 y_{t-3}^* +\zeta_1 x_{t-1} + \zeta_2 x_{t-2}+ \zeta_3 x_{t-3} +\xi_t\]

- Set up the restriction, C(5)=C(6)=C(7)=0, test the implied hypothesis, and save the statistic;

- Repeat Steps 3-5 B times. I suggest 999 times.
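The steps above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the addin's code: the model is cut down to one lag of each variable, the data are simulated only so the sketch runs, and the helper names ols and wald_eta are hypothetical. With a single restriction, the Wald statistic is the squared t-ratio.

```python
import random

def ols(X, y):
    """OLS via the normal equations; returns coefficients, residuals, covariance."""
    k, n = len(X[0]), len(y)
    # Augmented matrix [X'X | I] for Gauss-Jordan inversion.
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [float(i == j) for j in range(k)] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(A[r][c]))  # partial pivoting
        A[c], A[p] = A[p], A[c]
        piv = A[c][c]
        A[c] = [v / piv for v in A[c]]
        for r in range(k):
            if r != c:
                f = A[r][c]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[c])]
    inv = [row[k:] for row in A]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    b = [sum(inv[i][j] * xty[j] for j in range(k)) for i in range(k)]
    resid = [yi - sum(bj * xj for bj, xj in zip(b, r)) for r, yi in zip(X, y)]
    s2 = sum(e * e for e in resid) / (n - k)
    cov = [[s2 * v for v in row] for row in inv]
    return b, resid, cov

def wald_eta(y, x):
    """Regress y_t on (1, y_{t-1}, x_{t-1}); Wald statistic for eta = 0."""
    X = [[1.0, y[t - 1], x[t - 1]] for t in range(1, len(y))]
    b, resid, cov = ols(X, y[1:])
    return b, resid, b[2] ** 2 / cov[2][2]

rng = random.Random(42)
# Hypothetical data, simulated only for illustration.
T = 120
x = [rng.gauss(0, 1) for _ in range(T)]
y = [0.0]
for t in range(1, T):
    y.append(0.5 + 0.4 * y[t - 1] + 0.3 * x[t - 1] + rng.gauss(0, 1))

# Step 1: estimate the model and obtain the residuals.
(a_hat, theta_hat, eta_hat), resid, wald_obs = wald_eta(y, x)
# Step 2: centre the residuals.
m = sum(resid) / len(resid)
centred = [e - m for e in resid]

B = 999
wald_boot = []
for _ in range(B):
    # Step 3: resample centred residuals with replacement, rebuild y* recursively.
    e_star = rng.choices(centred, k=T - 1)
    y_star = [0.0]  # initial value set to zero, as in the text
    for t in range(1, T):
        y_star.append(a_hat + theta_hat * y_star[t - 1]
                      + eta_hat * x[t - 1] + e_star[t - 1])
    # Steps 4-5: re-estimate on the pseudo data and save the statistic.
    wald_boot.append(wald_eta(y_star, x)[2])
```

The list wald_boot plays the role of the saved vector of statistics, and wald_obs is the statistic from the original data.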

These are the steps involved in bootstrap simulation. The resulting distribution can then be used to decide whether or not there is a causal effect from variable \(x_t\) to variable \(y_t\). Of course, you can compute various percentiles. For \(\chi^2\) or \(F\), whose support is the positive half-line, the critical values for the 1, 5 and 10 percent levels can be computed by issuing @quantile(wchiq,\(\tau\)), where wchiq is the vector of 999 statistics and \(\tau=0.99\) for 1 percent, \(\tau=0.95\) for 5 percent and \(\tau=0.90\) for 10 percent. What @quantile() does internally is order the elements of the vector and then select the values corresponding to the percentiles of interest. In the absence of this function, which is inbuilt in Eviews, you can manually order the elements from lowest to highest and then select the values at the respective percentile positions.
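The manual ordering procedure described above can be sketched in Python. This is a minimal illustration assuming a simple order-statistic rule without interpolation; the rule Eviews applies inside @quantile() may differ in detail. The name empirical_quantile and the stand-in vector are hypothetical.

```python
import math

def empirical_quantile(values, tau):
    """Order the values and pick the one at the tau-th position (no interpolation)."""
    ordered = sorted(values)
    # ceil(tau * B) is the 1-based rank; clamp it to the valid range.
    rank = min(max(math.ceil(tau * len(ordered)), 1), len(ordered))
    return ordered[rank - 1]

# Hypothetical stand-in for the 999 saved statistics: 1, 2, ..., 999.
wchiq = list(range(1, 1000))

# Critical values for the 10, 5 and 1 percent levels.
crit = {tau: empirical_quantile(wchiq, tau) for tau in (0.90, 0.95, 0.99)}
print(crit)  # {0.9: 900, 0.95: 950, 0.99: 990}
```

With 999 replications, the 90th, 95th and 99th percentiles fall on the 900th, 950th and 990th ordered values, which is one reason 999 is a convenient number of iterations.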

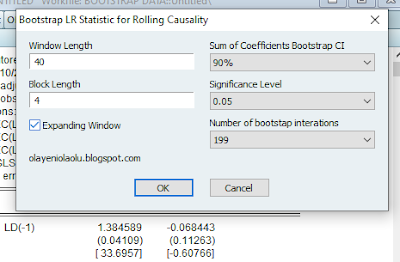

The Eviews addin, which can be downloaded here, carries out all the steps listed above for LS, ARDL, BREAKLS, THRESHOLD, COINTREG, VARSEL and QREG methods. It works directly with the equation object. This means you will first estimate your model just as usual and then run the addin on the estimated model equation. In what follows I show you how this can be used with two examples.

An example...

I estimate the breakpoint least squares model: LD C LD(-1 to -2) LE(-1 to -2), with a view to testing the symmetry of the causal effect across regimes. Four regimes are detected using the Bai-Perron L+1 vs L sequentially determined breaks approach. The break dates are 1914Q2, 1937Q1 and 1959Q2. The coefficients of the lagged LE for the first regime are C(4) and C(5), and for the second regime they are C(9) and C(10). The third and the fourth regimes have C(14) and C(15), and C(19) and C(20), respectively. The restriction we want to test is whether the causal effects are similar across these four regimes. The causal effect is the sum of the estimated coefficients in each regime. Thus, we test the following hypothesis, the rejection of which would indicate asymmetric causal effects across the regimes:

C(4)+C(5)=C(9)+C(10)=C(14)+C(15)=C(19)+C(20)

The model is estimated and the output is in Figure 1.

Figure 1

After estimating the model, you can access the addin from the Proc tab, where you can locate Add-ins. Follow the right arrow to locate Bootstrap MC Restriction Simulation. This is shown in Figure 2. If you have other addins for the equation object, they'll be listed here as well.

Figure 2

In Figure 3, the Bootstrap Restrictions dialog box shows up. You can interact with the different options and prompts. In the edit box, you can input the restrictions just as you would in the Eviews Wald test dialog. Four options are listed under Bootstrap Monte Carlos, where the default is Bootstrap. The number of iterations can be selected from three choices: 99 (good for a preliminary look at the results), 499 and 999. If you want the graph generated, check Distribution graph. Lastly, a "follow me @ olayeniolaolu.blogspot.com" note also stares at you with 💗.

Figure 3

In Figure 4, I input the restriction discussed above and also check Distribution graph because I want one (who would not want that?).

Figure 4

The result is the graph reported in Figure 5. The computed value is in the acceptance region, so we cannot reject the null hypothesis that the causal effects are the same across the four regimes.

Figure 5

Another example...

Consider an ARDL(3,2) model. The model is given by \[ld_t=\alpha+\sum_{j=1}^3\theta_j ld_{t-j}+\sum_{j=0}^2\eta_j le_{t-j}+\xi_t\]This model can be reparameterized as \[ld_t=\gamma+\beta le_t +\sum_{j=1}^3\theta_j ld_{t-j}+\sum_{j=0}^1\delta_j \Delta le_{t-j}+\xi_t\]where the \(\delta_j\) are the coefficients on the differenced terms. The long-run relationship is then stated as\[ld_t=\mu +\varphi le_t+u_t\]where \(\mu=\gamma(1-\sum_{j=1}^3\theta_j)^{-1}\) and \(\varphi=\beta(1-\sum_{j=1}^3\theta_j)^{-1}\).
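To see where the reparameterized equation comes from, note that \(le_{t-1}=le_t-\Delta le_t\) and \(le_{t-2}=le_t-\Delta le_t-\Delta le_{t-1}\). Substituting these into the distributed-lag term of the original ARDL(3,2) equation gives, as a short worked step,\[\sum_{j=0}^2\eta_j le_{t-j}=(\eta_0+\eta_1+\eta_2)\,le_t-(\eta_1+\eta_2)\,\Delta le_t-\eta_2\,\Delta le_{t-1}\]so that, with the \(\eta_j\) here being those of the original equation, \(\gamma=\alpha\), \(\beta=\eta_0+\eta_1+\eta_2\), and the coefficients on \(\Delta le_t\) and \(\Delta le_{t-1}\) are \(-(\eta_1+\eta_2)\) and \(-\eta_2\) respectively.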

To estimate this model, I use the reparameterized version and then input the following expression using the LS (note!) method:

ld c le ld(-1 to -3) d(le) d(le(-1))

From the same equation output, we want to compute the distributions for the long-run parameters \(\mu\) and \(\varphi\). For the intercept (\(\mu\)), I input the following restriction

C(1)/(1-C(3)-C(4)-C(5))=0

while for the slope (\(\varphi\)), I input the following restriction

C(2)/(1-C(3)-C(4)-C(5))=0

Although the addin does not plot the graphs for these distributions, the vectors of their respective values are generated and stored in the workfile. Therefore, you can work with them as you desire. In this example, I report the distributions of the two estimates of the long-run coefficients in Figures 6 and 7.

Figure 6

Figure 7

You can use these estimates for bias correction if there are reasons to suspect overestimation or underestimation of the intercept and slope.

Working with Output

Apart from plotting the graphs, which you can present in your work, the addin gives you access to the simulated results in vectors. The three vectors that you will have after testing your restriction are bootcoef##, boottvalue## and f_bootwald##, where ## is the sequence number appended to each new instance of the objects generated after the first run. The first, bootcoef, holds the bootstrap estimates of the restricted coefficient when only one restriction is involved. The hypothesis involves only one restriction if there is only one equality (=) sign in it. boottvalue is the vector of the corresponding t-values in this case. f_bootwald holds the F statistics for a hypothesis with more than one restriction, that is, the joint test. You can use these for specific, tailor-made analysis in your research. Let me take you through one that may interest you. Can we compute a bootstrap confidence interval for the restriction tested previously? I suggest you do it for the restricted coefficient. To do this, you need both the mean and the standard deviation of the distribution, the latter depending on the former. You don't need to compute them by hand, because Eviews has inbuilt routines that deliver a good number of statistics for everyday use. So you can compute the standard deviation directly using the following:

scalar sdev=@stdev(bootcoef)

This routine computes the standard deviation given by:\[\widehat{SE}(\hat{\tau})=\left[\frac{1}{B-1}\sum_{b=1}^B (\hat{\tau}^b-\bar{\hat{\tau}})^2\right]^{1/2}\]where the mean value is given by\[\bar{\hat{\tau}}=\frac{1}{B}\sum_{b=1}^B \hat{\tau}^b\]The following code snippet computes the bootstrap confidence interval:

scalar meanv=@mean(bootcoef)

scalar sdev=@stdev(bootcoef)

scalar z_u=@quantile(bootcoef, 0.025)

scalar z_l=@quantile(bootcoef, 0.975)

scalar lowerbound=meanv-z_l*sdev

scalar upperbound=meanv-z_u*sdev

Using this code, you will find the lower bound to be 0.208 and the upper bound to be 0.466, while the mean value will be 0.439.

Perhaps you are interested in a further robustness check. The bootstrap-t confidence interval can be computed from the standardized bootstrap estimates. Use the following code snippet:

scalar meanv=@mean(bootcoef)

scalar sdev=@stdev(bootcoef)

vector zboot=(bootcoef-meanv)/sdev

scalar t_u=@quantile(zboot, 0.025)

scalar t_l=@quantile(zboot, 0.975)

scalar lowerbound=meanv-t_l*sdev

scalar upperbound=meanv-t_u*sdev
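A short worked step may help in reading this snippet. Assuming the quantile rule is unaffected by shifting and rescaling the data (which holds for the usual order-statistic and linear-interpolation rules), the \(p\)-quantile of zboot equals \((q_p-\bar{\hat{\tau}})/s\), where \(q_p\) is the \(p\)-quantile of bootcoef, \(\bar{\hat{\tau}}\) is meanv and \(s\) is sdev. Each bound of the form meanv minus a zboot quantile times sdev therefore simplifies to\[\bar{\hat{\tau}}-\frac{q_p-\bar{\hat{\tau}}}{s}\,s=2\bar{\hat{\tau}}-q_p\]That is, the interval reflects the quantiles of bootcoef around the bootstrap mean: the upper quantile determines the lower bound and the lower quantile determines the upper bound.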

For more Eviews statistical routines that you can use for specific analysis, look them up here. Again, this addin can be accessed here. The data used can be accessed here as well.

Glad that you've followed me to this point.