Introduction

The Augmented ARDL is an approach designed to address the question of whether the dependent variable in an ARDL model is I(0) or I(1). With an I(0) dependent variable, it is difficult to infer a long-run relationship between the dependent variable and the regressor(s) even if the F statistic is above the upper critical bound in the widely used bounds-testing procedure. The reason is that an I(0) dependent variable is, by definition, stationary (permit my tautological rigmarole)! This means that, in jointly testing for a long-run relationship via the F statistic, a computed F value above the upper bound might just reflect the I(0)-ness of the dependent variable. What is more, the other exogenous variables may turn out to be insignificant: when they are tested without the I(0) dependent variable, the resulting t statistic (if there is only one exogenous variable) or F statistic (if there is more than one) becomes insignificant. Thus, the I(0) variable may dominate the joint test whether or not the other variables contribute significantly to the long-run relationship. The result is wrong inference.

ARDL at a glance

While the PSS-ARDL approach is a workhorse for estimating and testing for a long-run relationship when I(0) and I(1) variables occur together, applied researchers often take certain assumptions for granted, thereby violating the conditions necessary for using the PSS-ARDL in the first place. For a bivariate specification, the PSS-ARDL(p,q), in its most general form, is given by \[\Delta y_t=\alpha+\beta t+\rho y_{t-1}+\gamma x_{t-1}+\sum_{j=1}^{p-1}\delta_j\Delta y_{t-j}+\sum_{j=0}^{q-1}\theta_j\Delta x_{t-j} +z_t^\prime\Phi+\epsilon_t\] where \(z_t\) represents the exogenous variables and could contain other deterministic variables like dummy variables, and \(\Phi\) is the vector of the associated parameters. Based on this specification, Pesaran et al. (2001) highlight five different cases for bounds testing, each implying a different null hypothesis. Although some of them are less interesting because they have less practical value, it is instructive to be aware of them:

- CASE 1: No intercept and no trend

- CASE 2: Restricted intercept and no trend

- CASE 3: Unrestricted intercept and no trend

- CASE 4: Unrestricted intercept and restricted trend

- CASE 5: Unrestricted intercept and unrestricted trend

An intercept or trend is restricted if it is included in the long-run (levels) relationship. For each of these cases, Pesaran et al. (2001) compute the associated t- and F-statistic critical values. These critical values are reported in that paper, and readers who need them are invited to consult it.

The cases above correspond to the following restrictions on the model:

- CASE 1: The estimated model is given by \[\Delta y_t=\rho y_{t-1}+\gamma x_{t-1}+\sum_{j=1}^{p-1}\delta_j\Delta y_{t-j}+\sum_{j=0}^{q-1}\theta_j\Delta x_{t-j} +z_t^\prime\Phi+\epsilon_t\] and the null hypothesis is \(H_0:\rho=\gamma=0\). This model is recommended if the series have been demeaned and/or detrended. Absent these operations, it should not be used for any analysis unless the researcher is strongly persuaded that it is the most suitable for the work, or it is used simply for pedagogical purposes.

- CASE 2: The estimated model is \[\Delta y_t=\alpha+\rho y_{t-1}+\gamma x_{t-1}+\sum_{j=1}^{p-1}\delta_j\Delta y_{t-j}+\sum_{j=0}^{q-1}\theta_j\Delta x_{t-j} +z^\prime_t\Phi+\epsilon_t\] where in this case \(\beta=0\). The null hypothesis is \(H_0:\alpha=\rho=\gamma=0\). The restrictions imply that both the dependent variable and the regressors move around their respective mean values. Think of the parameter \(\alpha\) as \(\alpha=-\rho\zeta_y-\gamma\zeta_x\), where \(\zeta_y\) and \(\zeta_x\) are the respective mean values, or the steady-state values to which the variables gravitate in the long run. Substituting this restriction into the model, we have \[\Delta y_t=\rho(y_{t-1}-\zeta_y)+\gamma (x_{t-1}-\zeta_x)+\sum_{j=1}^{p-1}\delta_j\Delta y_{t-j}+\sum_{j=0}^{q-1}\theta_j\Delta x_{t-j} +z^\prime_t\Phi+\epsilon_t\] This model therefore has some practical value and is suitable for modelling the long-run behaviour of some variables. However, because the dependent variable does not possess a trend, owing to the absence of an intercept in the short run, this specification's utility is limited given that most economic variables are I(1).

- CASE 3: The estimated model is the same as in CASE 2, with \(\beta=0\). However, the null hypothesis is \(H_0:\rho=\gamma=0\). This means the intercept is pushed into the short-run relationship, so the dependent variable has a linear trend, upwards or downwards depending on the sign of \(\alpha\). This characteristic is benign if the dependent variable really has a trend in it. However, this is not a feature of an I(0) dependent variable. As most macroeconomic variables are I(1), this specification is often recommended. In Eviews, it is the default setting for model specification.

- CASE 4: The model estimated for CASE 4 is the full model. Here, the trend is restricted while the intercept is unrestricted. The null hypothesis is therefore \(H_0:\beta=\rho=\gamma=0\). This specification suggests that the dependent variable is trending in the long run. If the dependent variable is not trending in the long run, this specification may simply be the wrong choice for modelling it.

- CASE 5: The last case, where both the intercept and the trend are unrestricted, is a perverse description of macroeconomic variables. It is also the full model, but it means the dependent variable trends quadratically. This does not fit most cases and is rarely used. The null hypothesis is \(H_0:\rho=\gamma=0\).
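To keep the five cases straight, it may help to write them down as a small lookup table. The sketch below is only a bookkeeping aid built from the list above: the names `BoundsCase`, `CASES` and `describe` are made up for this illustration and are not part of Eviews or of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundsCase:
    label: str             # wording used in the list of cases
    deterministics: tuple  # deterministic terms in the estimated regression
    null_params: tuple     # parameters set to zero under the bounds-test null


# Hypothetical lookup table; parameter names follow the symbols in the text.
CASES = {
    1: BoundsCase("No intercept and no trend",
                  (), ("rho", "gamma")),
    2: BoundsCase("Restricted intercept and no trend",
                  ("alpha",), ("alpha", "rho", "gamma")),
    3: BoundsCase("Unrestricted intercept and no trend",
                  ("alpha",), ("rho", "gamma")),
    4: BoundsCase("Unrestricted intercept and restricted trend",
                  ("alpha", "beta"), ("beta", "rho", "gamma")),
    5: BoundsCase("Unrestricted intercept and unrestricted trend",
                  ("alpha", "beta"), ("rho", "gamma")),
}


def describe(case: int) -> str:
    """Return a one-line summary of a case and its null hypothesis."""
    c = CASES[case]
    return f"CASE {case}: {c.label}; H0: {' = '.join(c.null_params)} = 0"


for number in CASES:
    print(describe(number))
```

Notice that a restricted term is exactly one that shows up in the null hypothesis: \(\alpha\) in CASE 2 and \(\beta\) in CASE 4.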

Getting More Gist about ARDL from ADF

The F statistic for bounds testing referred to above is necessary, but not sufficient, to detect whether or not there is a long-run relationship between the dependent variable and the regressors. The reason is that both I(0) and I(1) variables may be present, and either may be treated as the dependent variable in the given model. Note that one of the requirements for valid inference about the existence of cointegration between the dependent variable and the regressors is that the dependent variable must be I(1). We can get the gist of this point by looking more closely at the relationship between the ARDL and the ADF model.

You may be wondering why the dependent variable must be I(1) in the ARDL model specification. The first thing to observe is that the ARDL is a multivariate formulation of the augmented Dickey-Fuller (ADF) regression. Does that sound strange? Suppose \(H_0: \gamma=\theta_0=\theta_1=\cdots=\theta_{q-1}=0\), that is, the insignificance of the other exogenous variables in the model, cannot be rejected. Then the model reduces to the standard ADF regression, given by \[\Delta y_t=\alpha+\rho y_{t-1}+\sum_{j=1}^{p-1}\delta_j\Delta y_{t-j}+\epsilon_t\] From this, we can see that if \(\rho\) is significantly negative, stationarity is established, and the variable \(y_t\) will be reckoned as I(0).

The fact that \(y_t\) is stationary in levels means that \(\rho\) must be significant whether or not the coefficients on the other variables are significant. Therefore, in a test involving this I(0) variable as the dependent variable and possibly I(1) independent variable(s), where the coefficient on the latter is found to be insignificant, it is still possible to find cointegration, not because there is one between these variables, but because the significance of the (lagged) dependent variable dominates the joint test and because only a subset of the associated alternative hypothesis is being considered. This is what the bounds test does without separating the significance of \(\rho\) and \(\gamma\). Note that the F test for bounds testing is based on the joint significance of these parameters. However, the joint test of \(\rho\) and \(\gamma\) does not tell us about the significance of \(\gamma\) alone.
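A small simulation makes the point concrete. The sketch below is an illustration under assumptions of my own, not the addin's code: \(y_t\) is a stationary AR(1) with coefficient 0.5, \(x_t\) is a random walk generated independently of \(y_t\), and the regression is the simplest CASE 3 specification with no lagged differences of \(y_t\). The helper names `ols` and `f_stat` are hypothetical, and the statistics would still have to be compared with the bounds critical values rather than the standard F and t tables.

```python
import numpy as np


def ols(X, y):
    """OLS by least squares: coefficients, residual sum of squares, covariance."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    rss = float(resid @ resid)
    sigma2 = rss / (len(y) - X.shape[1])
    return beta, rss, sigma2 * np.linalg.inv(X.T @ X)


def f_stat(X_full, X_restricted, y):
    """F statistic for the zero restrictions that reduce X_full to X_restricted."""
    rss_u = ols(X_full, y)[1]
    rss_r = ols(X_restricted, y)[1]
    n_restrictions = X_full.shape[1] - X_restricted.shape[1]
    dof = len(y) - X_full.shape[1]
    return ((rss_r - rss_u) / n_restrictions) / (rss_u / dof)


rng = np.random.default_rng(0)
T = 500
x = np.cumsum(rng.standard_normal(T))  # I(1) regressor, unrelated to y by construction
e = rng.standard_normal(T)
y = np.zeros(T)
for t in range(1, T):
    y[t] = 0.5 * y[t - 1] + e[t]       # stationary AR(1), so y is I(0)

dy, dx = np.diff(y), np.diff(x)        # first differences, aligned with lagged levels
const = np.ones(T - 1)
X_full = np.column_stack([const, y[:-1], x[:-1], dx])  # alpha, rho, gamma, theta_0

beta, _, cov = ols(X_full, dy)
t_rho = beta[1] / np.sqrt(cov[1, 1])
t_gamma = beta[2] / np.sqrt(cov[2, 2])
f_overall = f_stat(X_full, np.column_stack([const, dx]), dy)       # H0: rho = gamma = 0
f_exog = f_stat(X_full, np.column_stack([const, y[:-1], dx]), dy)  # H0: gamma = 0

print(f"overall F = {f_overall:.2f}, t(rho) = {t_rho:.2f}, exogenous F = {f_exog:.2f}")
```

Because \(y_t\) is stationary here, the joint F statistic is driven almost entirely by \(\rho\); the statistic on \(\gamma\) alone is what reveals whether \(x_t\) contributes anything.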

Degenerate cases

How then can we proceed? More tests are needed. To see why, we must first understand what the issues really are. At the centre of the matter are two cases of degeneracy. They arise because the bounds test (a joint F test) involves both the coefficient on the lagged dependent variable, \(\rho\) in the model above, and the coefficients on the lagged exogenous variables. Although PSS report the t statistic for \(\rho\) separately with a view to robust inference, researchers often ignore it and, in any case, the t statistic reported along with the F statistic is not enough to avoid the pitfall. In short, the null hypothesis for the bounds test, \(H_{0}: \rho=\gamma=0\), can be seen as a compound one involving \(H_{0,1}: \rho=0\) and \(H_{0,2}: \gamma=0\). So rejecting the compound null is not by itself proof of cointegration. This is because the alternative is not just \(H_{1}: \rho\neq0,\ \gamma\neq 0\), as often assumed in applications; the alternative also covers \(H_{1,1}: \rho\neq0\) alone and \(H_{1,2}: \gamma\neq0\) alone. In other words, a more comprehensive testing procedure must also test the null hypotheses associated with these alternatives. Thus, we have the following three null hypotheses, each with its alternative:

- (1) \(H_{0}: \rho=\gamma=0\) against \(H_{1}: \rho\neq0\) or \(\gamma\neq0\)

- (2) \(H_{0,1}: \rho=0\) against \(H_{1,1}: \rho\neq0\)

- (3) \(H_{0,2}: \gamma=0\) against \(H_{1,2}: \gamma\neq0\)

Taxonomies of Augmented Bounds Test

Therefore, we state the following taxonomy for hypothesis testing:

- if null hypotheses (1) and (2) are rejected but (3) is not, we have a case of degenerate lagged independent variable. This case implies absence of cointegration;

- if null hypotheses (1) and (3) are rejected but (2) is not, we have a case of degenerate lagged dependent variable. This case also implies absence of cointegration; and

- if null hypotheses (1), (2) and (3) are all rejected, then there is cointegration.

We now have a clear roadmap to follow. What this implies is that one needs to augment the testing as stated above. Hence the augmented ARDL testing procedure. With this procedure for testing for cointegration, it is no longer an issue whether or not the dependent variable is I(0) or I(1) as long as all the three null hypotheses are rejected.
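The roadmap can be sketched as a small decision function. This is an illustration only, under the reading that a degenerate case arises when the overall test rejects but one of the two component tests does not. The function name `classify` and its arguments are hypothetical, the "inconclusive" zone between the I(0) and I(1) bounds is ignored, and the numbers in the usage lines are invented, not real critical values.

```python
def classify(f_overall, t_dep, f_exog, f_upper, t_upper, f_exog_upper):
    """Classify the outcome of the three augmented bounds tests.

    Each *_upper argument is the I(1) critical bound for the matching statistic.
    The t bound is negative, so rejection means t_dep lies below it.
    """
    reject_1 = f_overall > f_upper    # (1) H0: rho = gamma = 0
    reject_2 = t_dep < t_upper        # (2) H0: rho = 0
    reject_3 = f_exog > f_exog_upper  # (3) H0: gamma = 0

    if reject_1 and reject_2 and reject_3:
        return "cointegration"
    if reject_1 and reject_2:
        return "degenerate lagged independent variable: no cointegration"
    if reject_1 and reject_3:
        return "degenerate lagged dependent variable: no cointegration"
    return "no cointegration"


# Invented numbers, purely to exercise the function.
print(classify(10.0, -5.0, 8.0, f_upper=5.0, t_upper=-3.5, f_exog_upper=5.0))
print(classify(10.0, -5.0, 1.0, f_upper=5.0, t_upper=-3.5, f_exog_upper=5.0))
print(classify(10.0, -1.0, 8.0, f_upper=5.0, t_upper=-3.5, f_exog_upper=5.0))
```

Only the first call, where all three nulls are rejected, is classified as cointegration.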

Now the Eviews addin...

First, note that this addin was written in Eviews 12. Its functionality in earlier versions is therefore not guaranteed.

Testing these hypotheses in Eviews should be straightforward, but doing it by hand may be laborious. All that is needed is to report all three tests noted above, as against the two reported natively in Eviews. The following addin helps you with all the computations you might need to do.

To use it, just estimate your ARDL model as usual and then use the Proc tab to locate the Add-ins. Figure 1 shows the ARDL method environment. Two variables are included. I chose a maximum lag of eight because I have enough quarterly data, 596 observations in total.

Figure 1

Once the model is estimated, use the Proc tab to locate Add-ins, as shown in Figure 2.

Figure 2

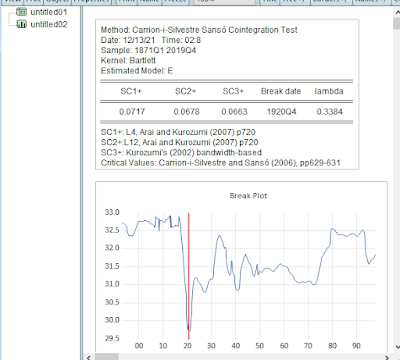

Click on Augmented ARDL Bound Test and you will get the output shown in Figure 3. The tests are reported underneath what you see here; just scroll down to look them up.

Figure 3

Figure 4

What is shown in Figure 4 should be the same as what Eviews reports natively. What this addin adds is the Exogenous F-Bounds Test shown in Figure 5. To confirm the test, we also append the Wald test for the exogenous variables to the spool. It comes under the title exogenous_wald_table. You can click to view it.

Figure 5

In this example, we are sure of cointegration because all three computed statistics are above the upper bound, suggesting that no case of degeneracy is lurking in our results.

Note the following...

Before working on the ARDL output, be sure to name it. At the moment, if the output is UNTITLED, Error 169 will be generated. This glitch is a really slippery one. It will be corrected later.

The results have the feel of the existing bounds-testing table in Eviews but have been extended with the tests for the exogenous variables. The F statistic is used for testing the exogenous variables. Thus, we have the section for the Overall F-Bounds Test, which is Null Hypothesis (1) above; the section for the t-Bounds Test, which is Null Hypothesis (2); and the section for the Exogenous F-Bounds Test, which is Null Hypothesis (3). The first two of these sections should be the same as in the native Eviews report. The last is an addition based on the paper by Sam, McNown and Goh (2018).

From the application point of view, the Exogenous F-Bounds tests for Cases 2 and 3 are the same:

- CASE 2: Restricted intercept and no trend

- CASE 3: Unrestricted intercept and no trend

just as those for Cases 4 and 5 are the same:

- CASE 4: Unrestricted intercept and restricted trend

- CASE 5: Unrestricted intercept and unrestricted trend

Therefore, the same critical values are reported for each pair in the literature. Thus, in the Eviews addin, the long-run results for both cases in a pair are reported.

The link to the addin is here. The data used in this example is here.

Thank you for reading a long post😀.